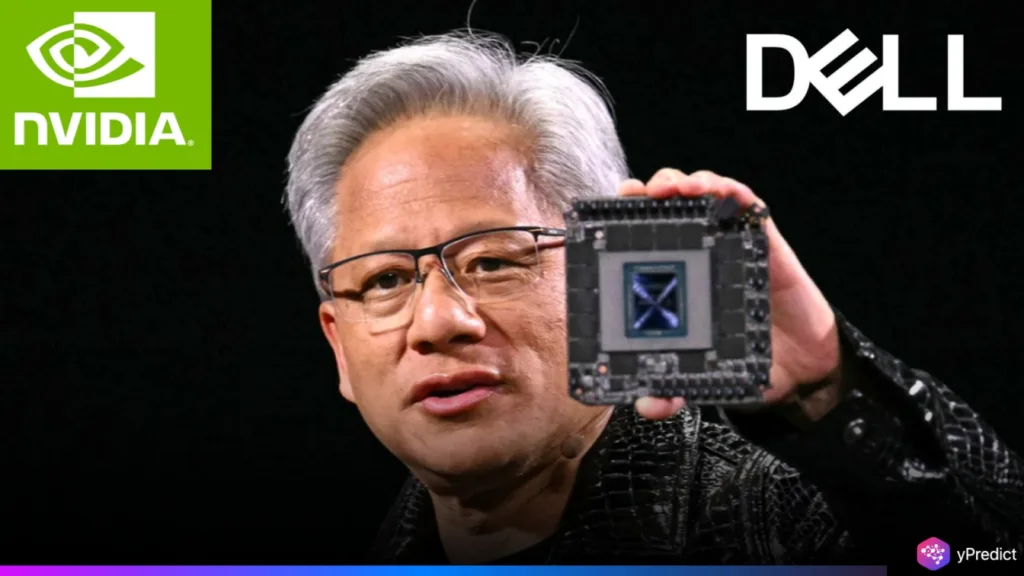

Dell has unveiled its latest AI servers built on NVIDIA GPUs, signaling a major shift in enterprise infrastructure. The next-generation systems are powered by NVIDIA's Blackwell Ultra GPUs.

Dell announced the servers on May 19, 2025, on its official blog, positioning them to meet rising demand for scalable, high-performance AI infrastructure. They are built to support advanced workloads such as generative AI, large language model training, and real-time inference at enterprise scale.

Designed for both air- and liquid-cooled environments, the new PowerEdge XE servers reflect Dell's deepening collaboration with NVIDIA and position the company as a key enabler of faster AI adoption across global enterprises.

Dell’s Strategic Push into Enterprise AI Infrastructure

As AI adoption becomes a top priority for global enterprises, Dell is expanding its portfolio with fully integrated, high-performance AI server solutions. Its new PowerEdge servers take center stage, with air- and liquid-cooled options to suit different operational requirements. The PowerEdge XE9680L, XE9680, XE9640L, and XE9640 target generative AI workloads with dense configurations of up to 256 GPUs per rack. Built on NVIDIA Blackwell Ultra GPUs, they enable training up to four times faster than earlier PowerEdge generations, according to Dell.

Dell designed the air-cooled PowerEdge XE9780 and XE9785 to integrate smoothly into existing data centers without disrupting current infrastructure. Their liquid-cooled counterparts, the XE9780L and XE9785L, are optimized for performance and efficiency in high-density, rack-scale deployments.

Dell says its next-generation servers are purpose-built for LLM training, inference, and agentic reasoning at enterprise scale. They support up to 192 NVIDIA Blackwell Ultra GPUs, expandable to 256 per Dell IR7000 rack. Using 8-way NVIDIA HGX B300 configurations, they deliver up to four times faster LLM training than their predecessors.

High-Performance Hardware and Efficient Cooling

The servers are available in both air-cooled and liquid-cooled designs. The liquid-cooled XE9680L and XE9640L improve thermal management, lower power consumption, and extend system longevity. These models include Dell's PowerCool™ technology to maximize energy efficiency.

Dell also unveiled the PowerEdge XE9680B, which features NVIDIA GB200 NVL72, a rack-scale configuration of 72 GPUs connected via NVLink. Dell says it delivers up to 30x higher AI inference performance and a 25x throughput improvement over previous models.

Dell also launched the PowerEdge XE9712, built on NVIDIA GB300 NVL72 for significantly higher rack-level efficiency. According to Dell, the platform delivers up to 50x higher inference output and a fivefold improvement in throughput, while its PowerCool technology improves energy efficiency.

Built for Future-Proof AI Environments

Beyond Blackwell GPUs, Dell says its servers will support NVIDIA's upcoming Vera CPUs and Vera Rubin architecture, enabling scalable, future-ready AI infrastructure. Integrated with NVIDIA AI Enterprise tools such as NeMo microservices, Llama-based models, and NIM inference microservices, these systems support large-scale agentic AI deployments.

To meet AI's rising data and connectivity demands, Dell expanded its networking lineup with the PowerSwitch SN5600 and SN2201 Ethernet switches. Built on the NVIDIA Spectrum-X platform, these switches offer high performance and seamless integration for demanding enterprise environments. Dell also added NVIDIA Quantum-X800 InfiniBand switches, which deliver up to 800 Gbps throughput and are backed by Dell ProSupport and Deployment Services.

Dell and NVIDIA have also deepened their collaboration through the Dell AI Factory, which now offers end-to-end managed services for generative AI deployments. These solutions are optimized for container platforms such as Red Hat OpenShift, enabling flexible hybrid deployments across data centers and cloud environments.

Conclusion

As competition in AI infrastructure intensifies and production costs rise, Dell is positioning networking and storage as drivers of profitability. The company aims to deliver strong value across its AI portfolio while sustaining growth in high-demand enterprise segments. Arthur Lewis, President of Dell's Infrastructure Solutions Group, emphasized the company's focus on cost-effective systems that meet next-generation AI demands.

With this announcement, Dell aims to strengthen its position as a key enabler of enterprise AI and lay the groundwork for organizations moving to AI-first operations.