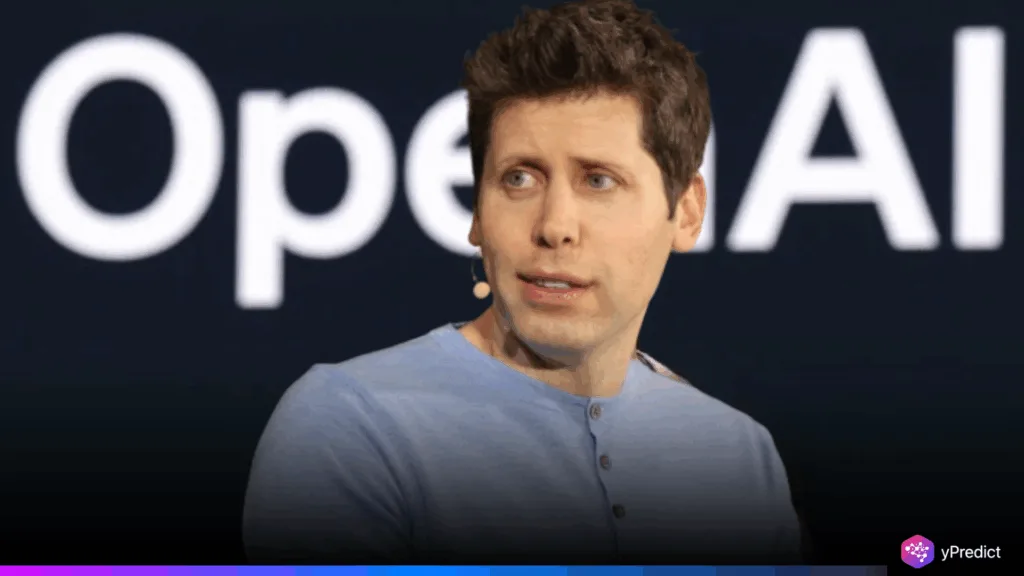

Artificial intelligence is quickly undermining traditional voiceprint authentication, a method widely used by banks to verify customers through speech. With the rise of deep learning and generative audio models, scammers can now clone voices using just a few seconds of audio, making it easier than ever to bypass systems once thought secure. On July 22, 2025, OpenAI CEO Sam Altman issued a dire warning about this weakness, describing voiceprint systems as completely defeated and predicting an imminent fraud crisis. His statement reflects growing industry concern that AI-based fraud and abuse will soon outpace existing security measures.

AI Voice Cloning Tools Can Bypass Biometric Bank Security Systems

Voiceprint authentication identifies a person by the characteristics of their voice: pitch, tone, and cadence. It has been widely adopted for its convenience and purported safety, particularly in phone-based transactions involving large sums. Nonetheless, the fast advancement of AI technologies, including OpenAI's GPT-4o and voice-generating GANs, has made convincing voice cloning simple and scalable. Fraudsters need only a few audio samples to imitate a target's voice and successfully pass authentication systems. This vulnerability is especially dangerous in the financial sector. According to security reports, fraud attempts in bank call centers now occur every 46 seconds.

More than half of these cases involve deepfake technology or generative AI. Hackers can impersonate clients, access sensitive accounts, and authorize large transactions using cloned voices. Despite these threats, many institutions still rely on voiceprint as a primary verification method. Sam Altman criticized this inertia, saying at a Federal Reserve banking conference, “AI has fully defeated voice authentication. It’s crazy to keep using it.” His comments mirror a growing consensus that existing biometric systems are no longer enough to protect high-value assets or personal data in an era of synthetic audio manipulation.

Banks Urged to Adopt Advanced, Multi-Layered Authentication Models

With the risk of fraud rising, security consultants are calling on banks to use multi-factor authentication. Combining voice recognition with other protections, such as one-time passwords, facial recognition, or behavioral biometrics, adds important layers of defense. Behavioral biometrics, for example, monitors typing patterns or mouse movements, which are hard for AI to imitate. Such tools improve verification precision and allow suspicious activity to be monitored in real time. Sam Altman's call for reform was not just a warning but a guideline.

He stressed that real innovation in identity verification is critical and that relying solely on aging methods such as voiceprint is more or less irresponsible. Companies such as Pindrop design software that examines slight nuances in sound to distinguish a human voice from an AI-generated one. Nevertheless, developers must continuously advance these systems as artificial intelligence models evolve at an alarming rate. Regulators also need to establish new standards for the financial industry, and they may soon need to issue additional authentication requirements.
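To make the layered approach concrete, here is a minimal Python sketch of how a voice match might be combined with a one-time password and a behavioral score. It is illustrative only: the `AuthSignals` fields, the `decide` function, the 0-to-1 score scale, and the 0.7 threshold are all hypothetical and do not describe any bank's or vendor's actual system.

```python
from dataclasses import dataclass


@dataclass
class AuthSignals:
    voice_match: bool      # voiceprint matched the enrolled profile
    otp_valid: bool        # caller supplied a correct one-time password
    behavior_score: float  # hypothetical scale: 0.0 (anomalous) to 1.0 (typical)


# Illustrative cutoff, not an industry standard.
BEHAVIOR_THRESHOLD = 0.7


def decide(signals: AuthSignals) -> str:
    """Return 'allow', 'review', or 'deny' for a phone transaction."""
    # A missing or wrong one-time password blocks the request outright,
    # no matter how convincing the voice sounds.
    if not signals.otp_valid:
        return "deny"
    # Every layer must agree before the request goes through automatically.
    if signals.voice_match and signals.behavior_score >= BEHAVIOR_THRESHOLD:
        return "allow"
    # Anything else is escalated to a human fraud analyst.
    return "review"


if __name__ == "__main__":
    cloned_voice_only = AuthSignals(voice_match=True, otp_valid=False, behavior_score=0.9)
    print(decide(cloned_voice_only))
```

In this sketch a cloned voice on its own is never sufficient: without a valid one-time password the request is denied, and unusual behavior sends it to manual review rather than approving it.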

Altman’s Warning Underscores Need for Urgent Action

The future of banking security is at a crossroads. As Sam Altman warned, voiceprint authentication has been overtaken by AI's ability to synthesize human-like speech. With deepfake audio already causing real financial losses, institutions must act now. Layered security, continuous system updates, and intelligent fraud detection are no longer optional; they are necessary to protect customers and financial networks. Relying on voice alone is no longer safe. To prevent a fraud crisis, banks should adopt a thorough authentication system suited to an AI-saturated world. The faster they adapt, the better they will be at fighting emerging risks and preserving customer trust.